Automate — extract from Agreenculture's solutions video © Agreenculture

CEOL's button panel

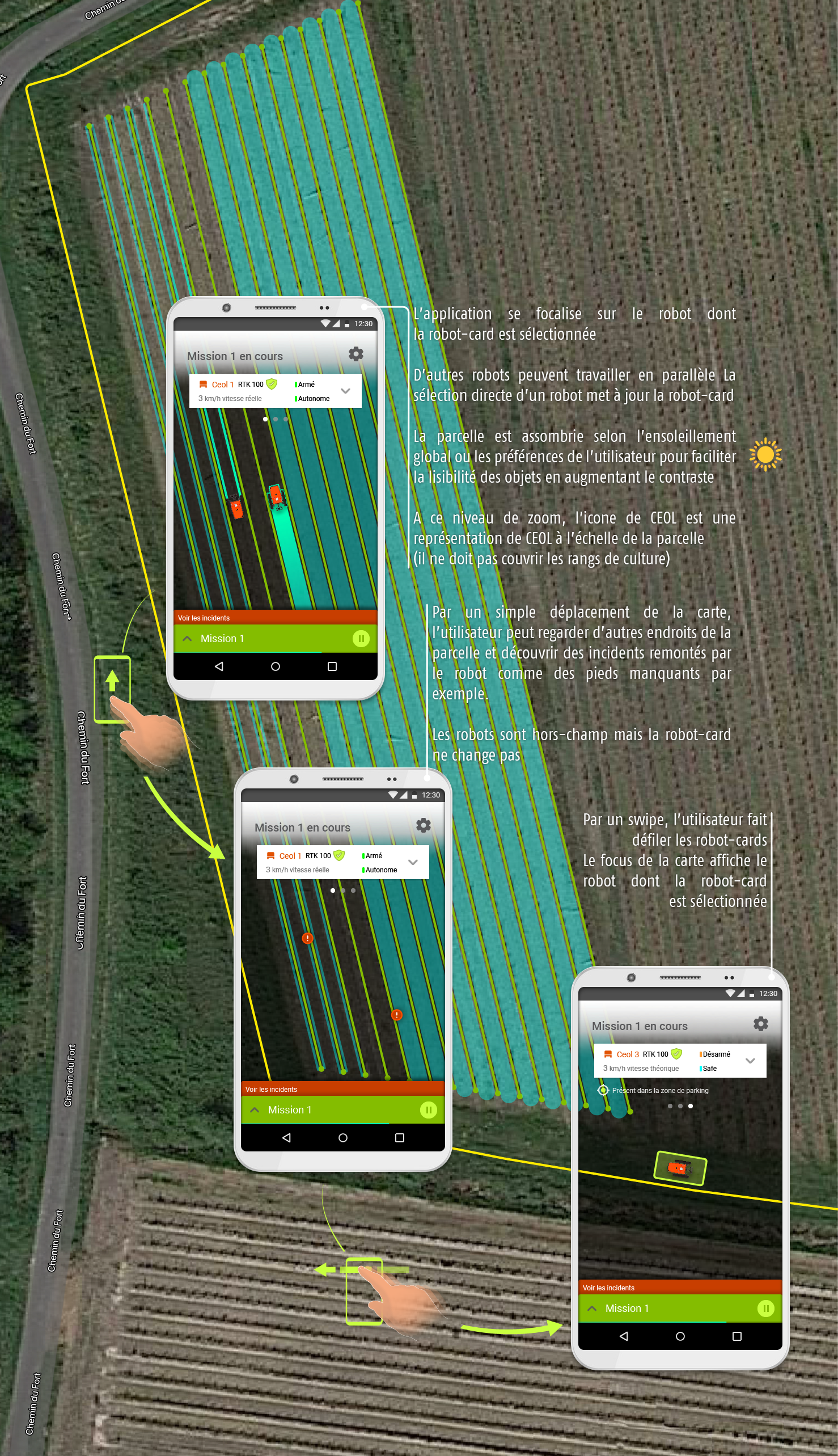

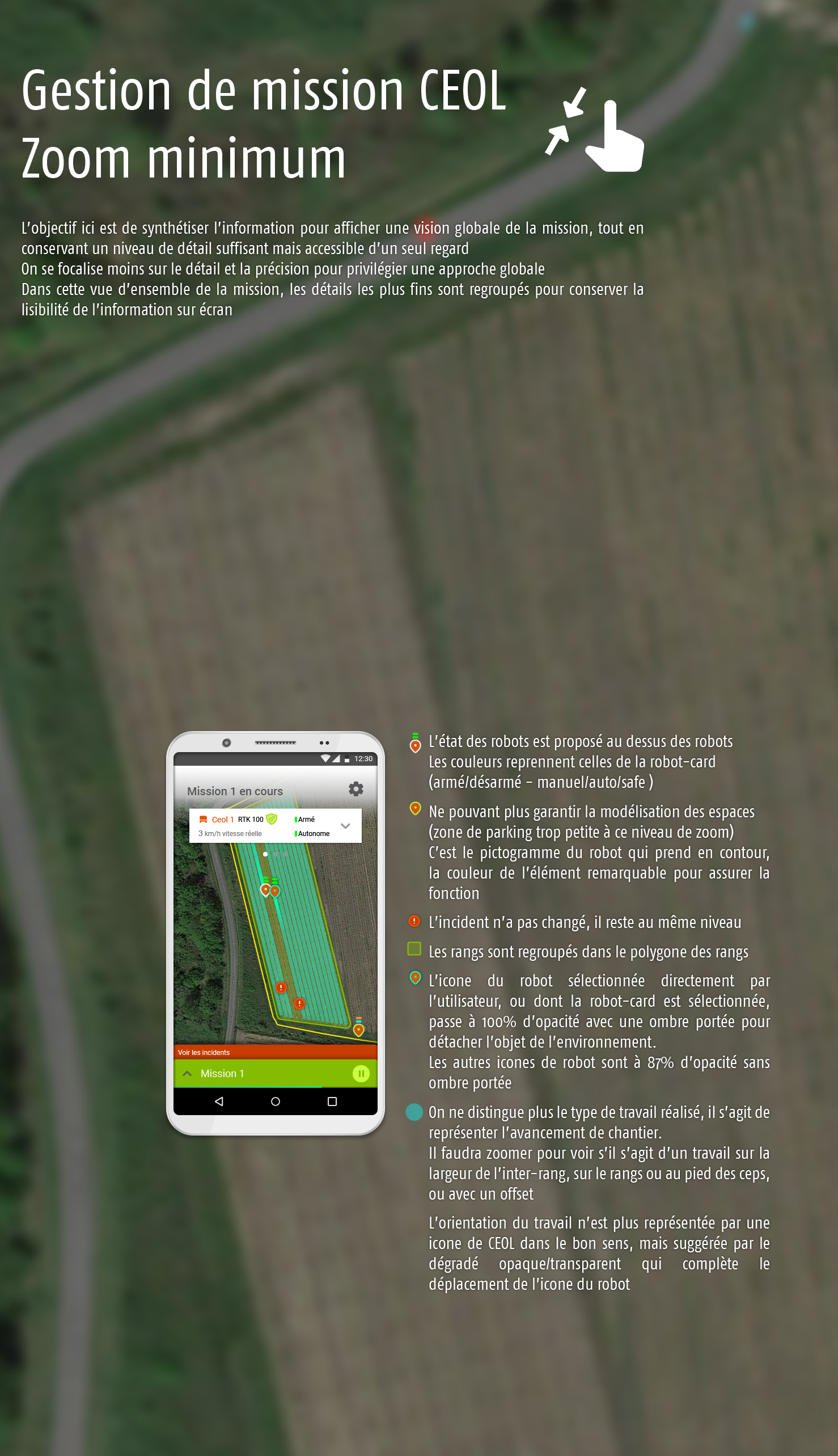

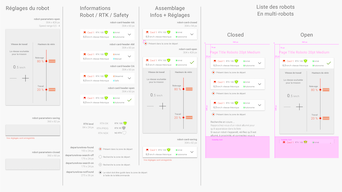

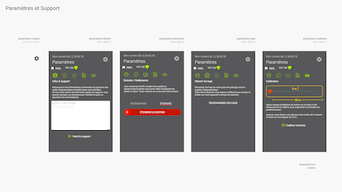

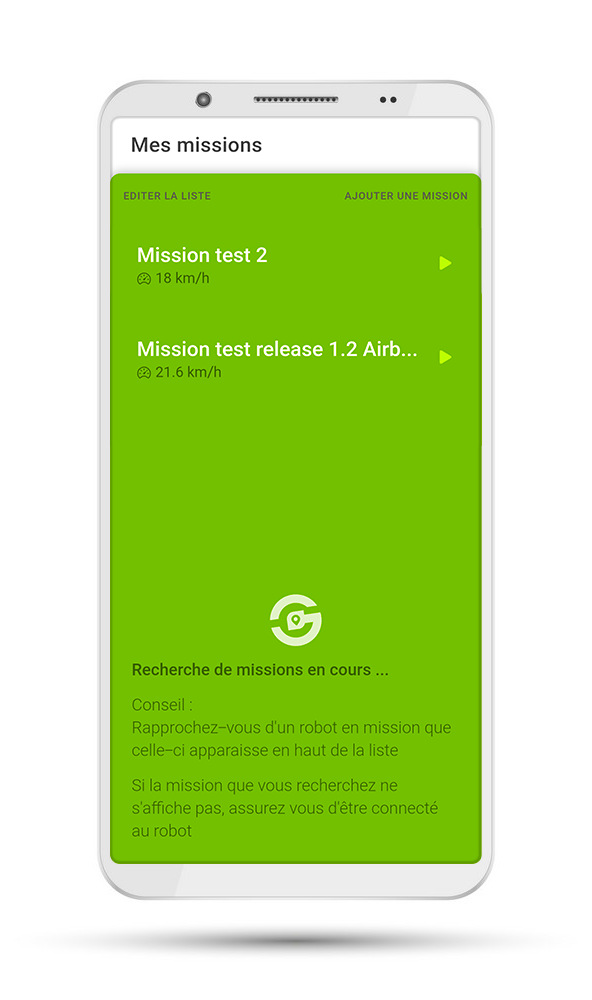

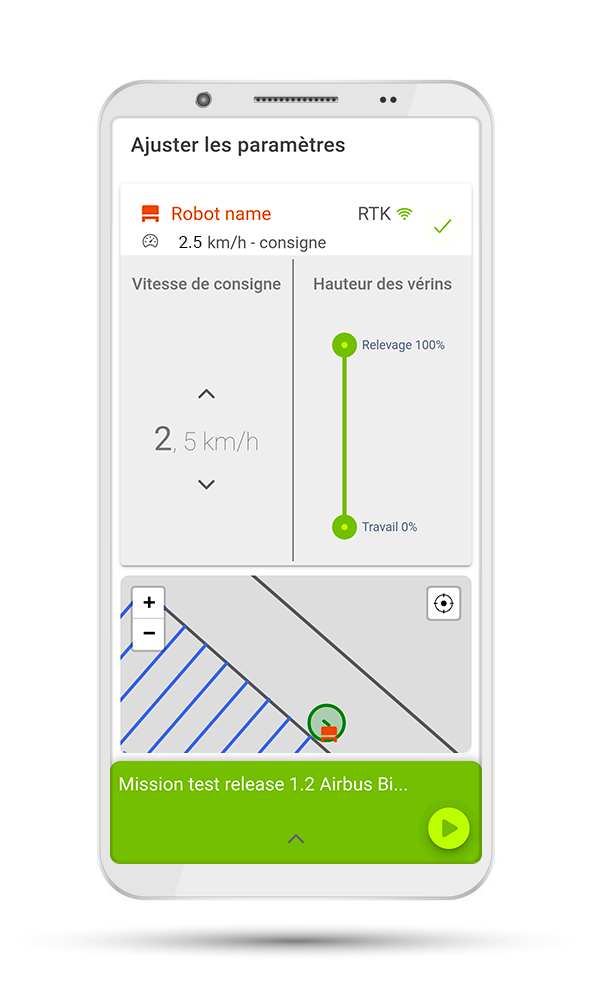

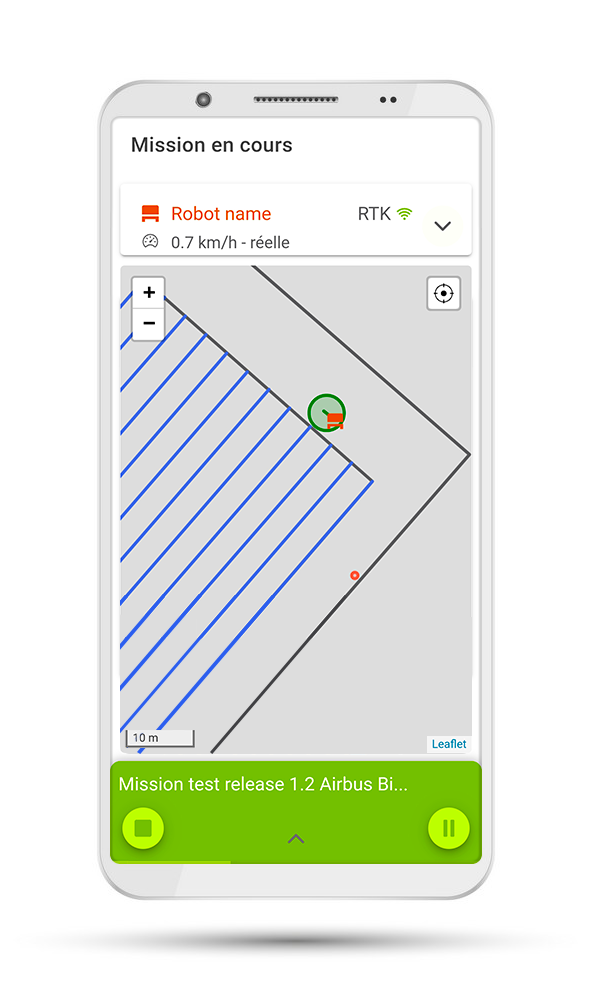

CEOL's Monitoring Application

operator running after the robot

operator holds the tablet with both hands

tablet after being run over by the tractor's tracks

If we want our products to be adopted, the first best practice is to take this context into account: it is already codified and offers solutions to problems similar to those we wish to tackle.

(left) iOS weather app © Apple - (right) launching a trip to get an ETA and navigation instructions with Waze © Waze

Mind-mapping is easy to set up and maintain in industrial conditions, where time matters.

After several iterations, the mind map becomes a synthesis of all the user stories.

complete screen flow of CEOL's mission management application

Example of an animated design mockup of a very early version of the app

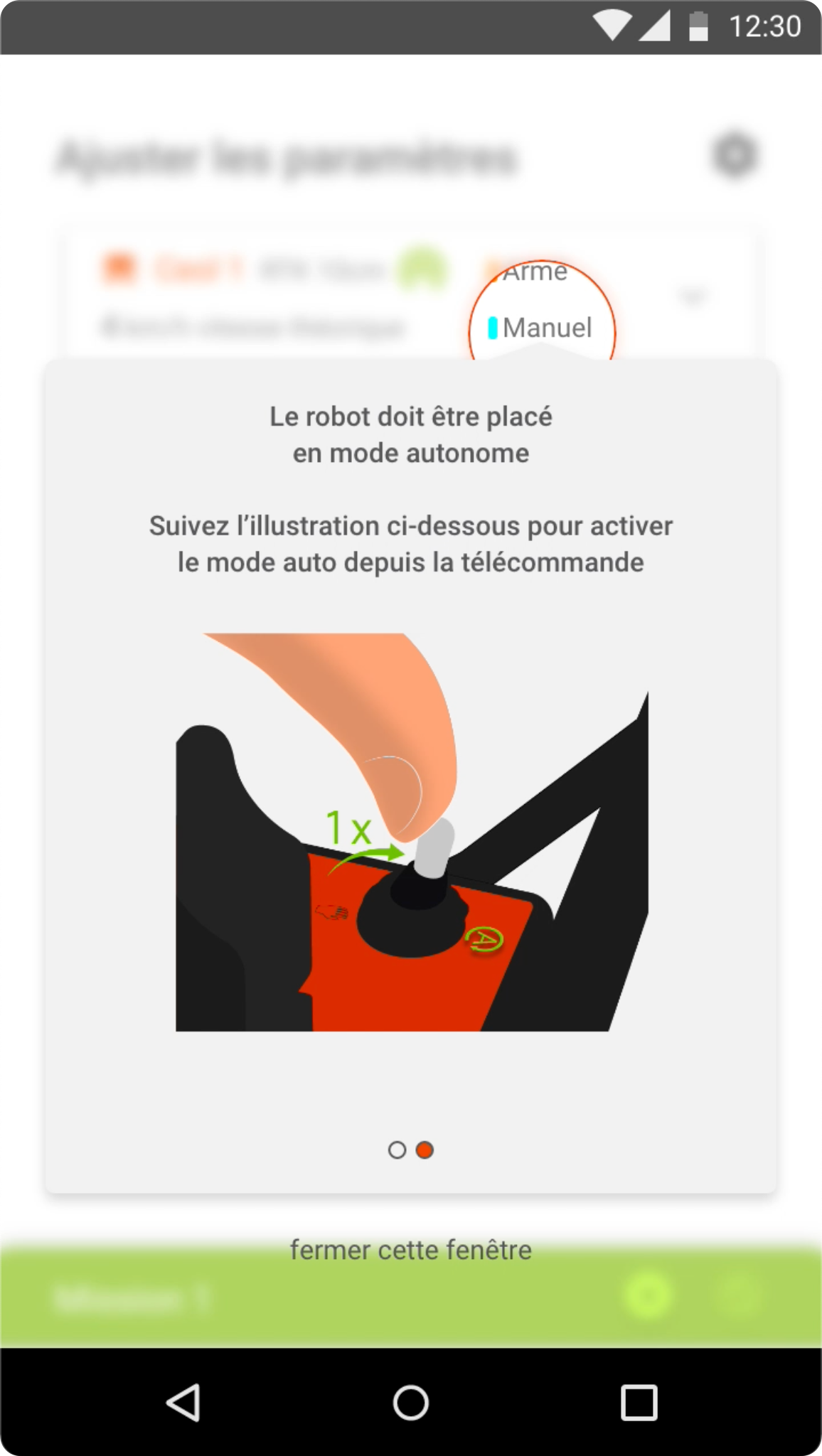

reminder to use the panel button on the robot to set the machine to "armed" status

reminder to use the "auto" switch on the remote controller to set the machine to "auto" status in order to release the robot

presentation of the application design and user path to future users © Agreenculture

sequence filmed by drone during a demonstration © Agreenculture

During testing sessions or demonstrations with end users, we put the mobile application in their hands. © Agreenculture

Video of actual product in 2021 — extract from FIRA 2021 Demonstration movie © Agreenculture